Publications

Authors: Deheng Zhang*, Jingyu Wang*, Shaofei Wang, Marko Mihajlovic, Sergey Prokudin, Hendrik P.A. Lensch, Siyu Tang (*equal contribution)

We present RISE-SDF, a method for reconstructing the geometry and material of glossy objects while achieving high-quality relighting.

Authors: Marko Mihajlovic, Sergey Prokudin, Siyu Tang, Robert Maier, Federica Bogo, Tony Tung, Edmond Boyer

SplatFields regularizes 3D Gaussian splats for sparse 3D and 4D reconstruction.

Authors: Xiyi Chen, Marko Mihajlovic, Shaofei Wang, Sergey Prokudin, Siyu Tang

We introduce a morphable diffusion model to enable consistent, controllable novel view synthesis of humans from a single image. Given a single input image and a morphable mesh with a desired facial expression, our method directly generates 3D-consistent and photo-realistic images from novel viewpoints, which can then be used to reconstruct a coarse 3D model with off-the-shelf neural surface reconstruction methods such as NeuS2.

Authors: Yan Zhang, Sergey Prokudin, Marko Mihajlovic, Qianli Ma, Siyu Tang

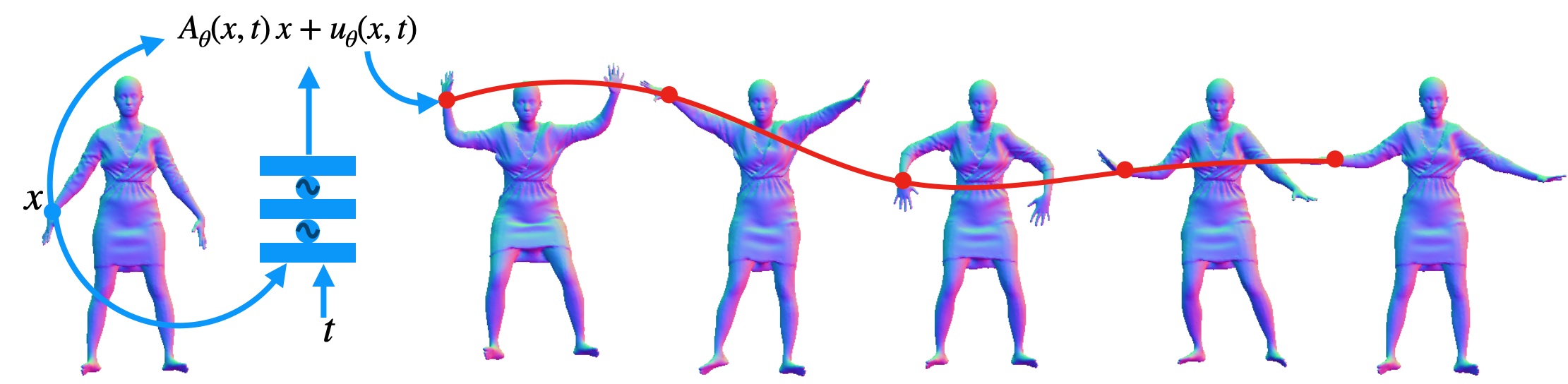

DOMA is an implicit motion field modeled by a spatiotemporal SIREN network. The learned motion field can predict how novel points move in the same field.

ResFields: Residual Neural Fields for Spatiotemporal Signals

Conference: International Conference on Learning Representations (ICLR 2024) spotlight presentation

Authors: Marko Mihajlovic, Sergey Prokudin, Marc Pollefeys, Siyu Tang

ResField layers incorporate time-dependent weights into MLPs to effectively represent complex temporal signals.

Authors: Sergey Prokudin, Qianli Ma, Maxime Raafat, Julien Valentin, Siyu Tang

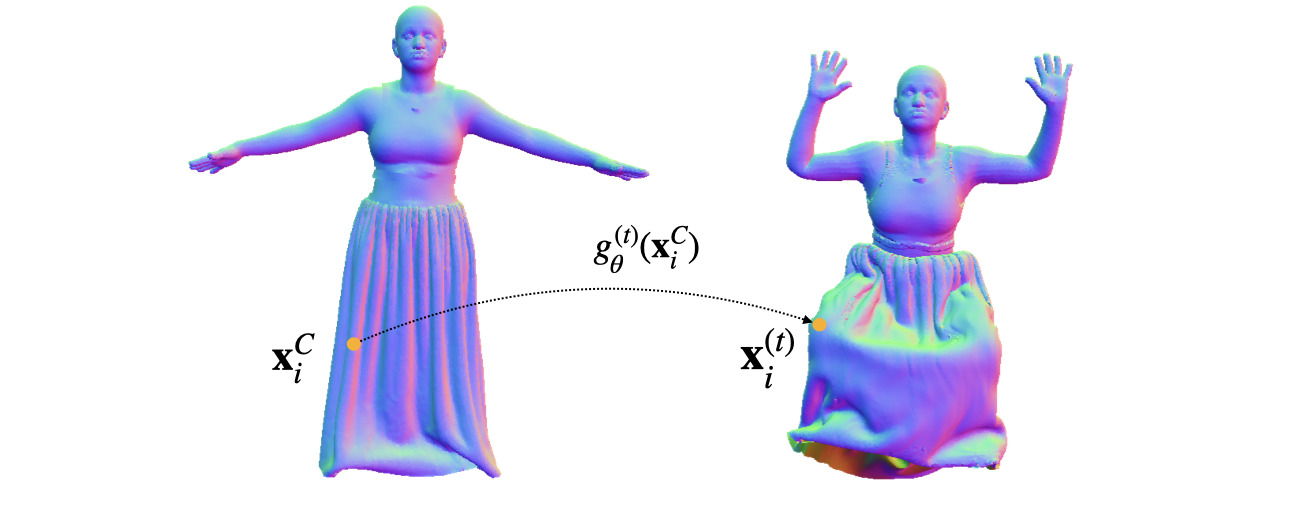

We propose to model dynamic surfaces with a point-based model, where the motion of a point over time is represented by an implicit deformation field. Working directly with points (rather than SDFs) allows us to easily incorporate various well-known deformation constraints, e.g., as-isometric-as-possible. We showcase the usefulness of this approach for creating animatable avatars in complex clothing.

Authors: Korrawe Karunratanakul, Sergey Prokudin, Otmar Hilliges, Siyu Tang

We present HARP (HAnd Reconstruction and Personalization), a personalized hand avatar creation approach that takes a short monocular RGB video of a human hand as input and reconstructs a faithful hand avatar exhibiting a high-fidelity appearance and geometry.